Category: NEWSVENDORSOpswat News

Digital transformation requires organizations to find more efficient ways to collect, manage, and share information while mitigating data risks and allocating resources sensibly. Computing infrastructure has likewise evolved over the past several decades to meet these demands.

Let’s take a quick trip down memory lane.

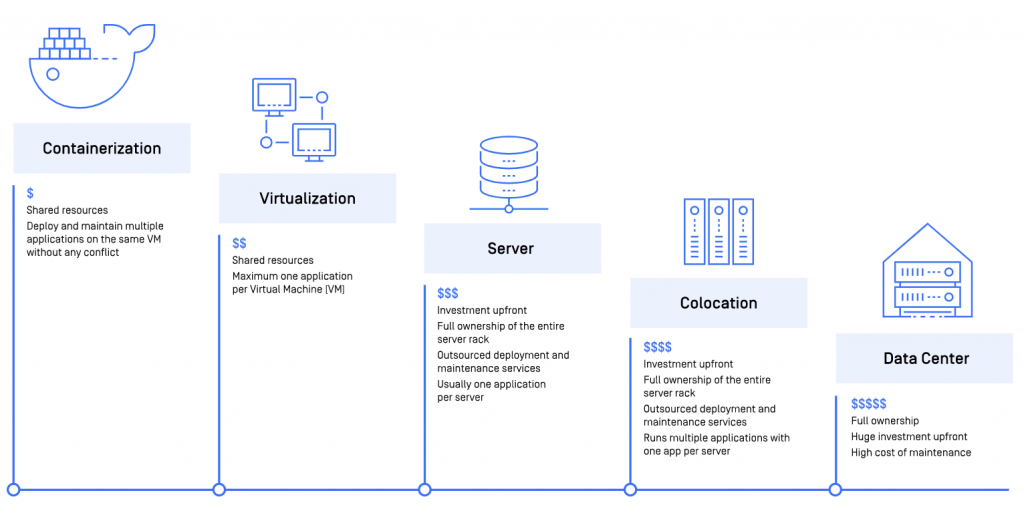

Around 80 years ago, in the 1940s, system administrators began to rely on “legacy infrastructure” such as data centers, colocation centers, and servers to house computer systems. However, this type of infrastructure came with large upfront investments and high monthly maintenance costs.

The networking layer itself was complex and expensive to build. Adding computing power to scale up was also a huge challenge: it could take at least three to six months just to add one server. A long list of requirements had to be met first: getting budget approval to order the necessary hardware, having the hardware shipped to the data center, and scheduling a maintenance window to deploy it, which in turn required rack space, networking configuration, additional power and cooling capacity, and a lot of recalculation to make sure everything stayed within operating parameters, among many other hurdles.

Getting access alone was already a slow and painful process, and any further change to a server, whether to fix a hardware failure or to perform an upgrade, cost a great deal of time and money. Organizations needed a better solution.

It was not until the late 1960s that the next stage of the infrastructure evolution happened: IBM introduced virtualization.

Virtualization is a method of logically distributing system resources between applications, in which virtual machines (VMs) serve as digital versions of a physical computer, each with its own operating system. VMs can turn one server into many servers. This lets virtualization solve problems that its predecessor could not: sharing computer resources among a large group of users, increasing the efficiency of computing capacity, improving resource utilization, and simplifying data center management, all while reducing the cost of technology investment.

This solution was a paradigm shift. Virtualization enabled cloud computing to disrupt the entire ecosystem, putting the ability to update instance configurations at anyone’s fingertips. More importantly, every task can be automated: no human intervention is required to provision, deploy, maintain, or rebuild your instances. Cutting the time to get access to a server from three to six months down to two minutes was truly a game-changer.

The more automation that VMs provided, the more developers could focus on building applications, and the less they needed to be concerned with deploying and maintaining the infrastructure. DevOps, CloudOps and DevSecOps teams have been taking over what used to be considered “legacy System Administration roles”.

However, each VM carries its own operating system image, which requires even more memory and storage. This adds overhead and makes the environment harder to manage. Virtualization also limits the portability of applications, especially in organizations shifting from a monolithic to a microservices architecture.

Fast-forward to 1979: the IT landscape saw the first container technology with the chroot system call in Version 7 Unix. But containerization did not take off until the 2000s.

A container is a standard software unit that packages an application’s code and all its dependencies, allowing the application to be shared, migrated, moved, and reliably executed from one computing environment to another.

Containerization solves many problems of virtualization. Containers take up less space than VMs, as container images are typically only tens of megabytes in size. A single host can therefore handle more applications, and fewer VMs and operating systems are required. Compared to VMs, containers are more lightweight, standardized, and secure, and they consume fewer resources.

A great benefit of containers is that they isolate applications from one another. Isolated environments allow a single VM to host as many as 10 to 20 very different applications or components. A container packages an application with everything needed to run it, including the code, runtime, system tools, system libraries, and settings, so that applications work uniformly and without conflicts despite differences between environments. Because container technology completely separates applications from the hardware, it makes the entire system highly portable, scalable, and manageable.

As the next generation of software development moves away from traditional monolithic applications toward the microservices model, containerization is here to stay.

In addition to shifting infrastructure from data centers to containers, organizations are looking to strengthen their cybersecurity solutions in container-based architectures. Leveraging the same concept, OPSWAT enables MetaDefender Core to be deployed via a containerized ecosystem within minutes. MetaDefender Core Container is a flexible deployment option that lets you scale out multiple MetaDefender Core applications, automate and simplify the deployment process, and remove the complexity and ambiguity caused by hidden dependencies.

The lightweight and easy-to-deploy MetaDefender Core Container solution saves overall costs on infrastructure, operation, and maintenance to help you achieve a much lower Total Cost of Ownership (TCO). By automating deployment and removing any environment-specific dependencies, MetaDefender Core Container enables you to focus on what matters most: scrutinizing every file for malware and vulnerabilities, sanitizing with Deep CDR (Content Disarm and Reconstruction) to prevent zero-day attacks and Advanced Persistent Threats (APTs), and protecting sensitive information with DLP (Data Loss Prevention) technology.
